Self-Replication to Preserve Innate Learned Structures in Artificial Neural Networks

It is worth investigating a combination of deep neuro-evolution and self-replication [1] as a way to evolve Artificial Neural Networks (ANNs) that can preserve and complexify innate learned structures serving auxiliary tasks (tasks orthogonal to self-replication itself).

In this way, it may become possible to build an evolutionary lineage tree of ANNs specialized in completing specific tasks under different environmental settings over successive training sessions. The knowledge acquired during these sessions would then accumulate not only in the learned connection weights but also in the network's topology.
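One way to picture such a lineage tree is the minimal sketch below, written in the spirit of NEAT-style neuro-evolution: every genome stores both its topology (nodes and links) and its connection weights, and keeps a pointer to its parent, so each training session adds a new branch. The names `Genome` and `offspring`, the Gaussian weight drift, and the single add-node mutation are illustrative assumptions, not the author's implementation.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class Genome:
    """Hypothetical genome: knowledge lives both in topology (nodes, links) and in link weights."""
    nodes: List[int]
    links: Dict[Tuple[int, int], float]                  # (source, target) -> weight
    session: str = "origin"                              # training session that produced it
    parent: Optional["Genome"] = field(default=None, repr=False, compare=False)
    children: List["Genome"] = field(default_factory=list, repr=False, compare=False)

    def lineage(self) -> List[str]:
        """Training sessions from the root of the tree down to this genome."""
        path, node = [], self
        while node is not None:
            path.append(node.session)
            node = node.parent
        return path[::-1]

def offspring(parent: Genome, session: str, rng: random.Random) -> Genome:
    """Inherit the parent's weights and topology, then complexify by splitting one link."""
    links = {k: w + rng.gauss(0.0, 0.1) for k, w in parent.links.items()}  # small weight drift
    nodes = list(parent.nodes)
    (src, dst), w = rng.choice(sorted(links.items()))   # link to split (NEAT-style add-node)
    new_node = max(nodes) + 1
    nodes.append(new_node)
    del links[(src, dst)]
    links[(src, new_node)] = 1.0                        # link into the new node gets weight 1.0
    links[(new_node, dst)] = w                          # link out of it keeps the old weight
    child = Genome(nodes, links, session=session, parent=parent)
    parent.children.append(child)
    return child

rng = random.Random(0)
root = Genome(nodes=[0, 1, 2], links={(0, 2): 0.5, (1, 2): -0.3})
stage1 = offspring(root, "stage-1", rng)
stage2 = offspring(stage1, "stage-2", rng)
print(stage2.lineage())   # ['origin', 'stage-1', 'stage-2']
print(len(stage2.nodes))  # 5
```

Each descendant carries forward both the inherited weights and every structural addition made along its branch, which is the sense in which knowledge accumulates in topology as well as in weights.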

Subsequently, with appropriate orchestration of the resulting intelligent agents, it may be possible to produce a swarm intelligence of much higher complexity than any individual agent, one able to adequately solve problems with imperfect knowledge through weighted consensus among all participants.
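To make "weighted consensus" concrete, here is a minimal sketch that assumes each agent returns a discrete answer and carries a scalar trust weight, for example its historical accuracy. The function name `weighted_consensus` and this weighting scheme are assumptions for illustration; the article does not specify how the weights would be obtained.

```python
from collections import defaultdict

def weighted_consensus(votes, weights):
    """Pick the answer backed by the largest total agent weight.

    votes:   agent id -> answer proposed by that agent
    weights: agent id -> non-negative trust weight (assumed, e.g. past accuracy)
    Returns (winning answer, share of the total weight supporting it).
    """
    totals = defaultdict(float)
    for agent, answer in votes.items():
        totals[answer] += weights.get(agent, 0.0)   # agents without a weight contribute nothing
    total = sum(totals.values())
    if total <= 0:
        raise ValueError("no agent carries positive weight")
    best = max(totals, key=totals.get)
    return best, totals[best] / total

votes = {"a": "left", "b": "right", "c": "right"}
weights = {"a": 0.75, "b": 0.25, "c": 0.25}
print(weighted_consensus(votes, weights))  # ('left', 0.6)
```

Here one highly trusted agent outvotes two weaker ones: agreement is measured by accumulated trust rather than by head count, and the returned share gives a rough confidence for the swarm's answer.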

Biological life began with the first self-replicator, and natural selection kicked in to favor organisms that are better at replication, resulting in a self-improving mechanism. Analogously, we can construct a self-improving mechanism for artificial intelligence via natural selection, if AI agents had the ability to replicate and improve themselves without additional machinery. [1]

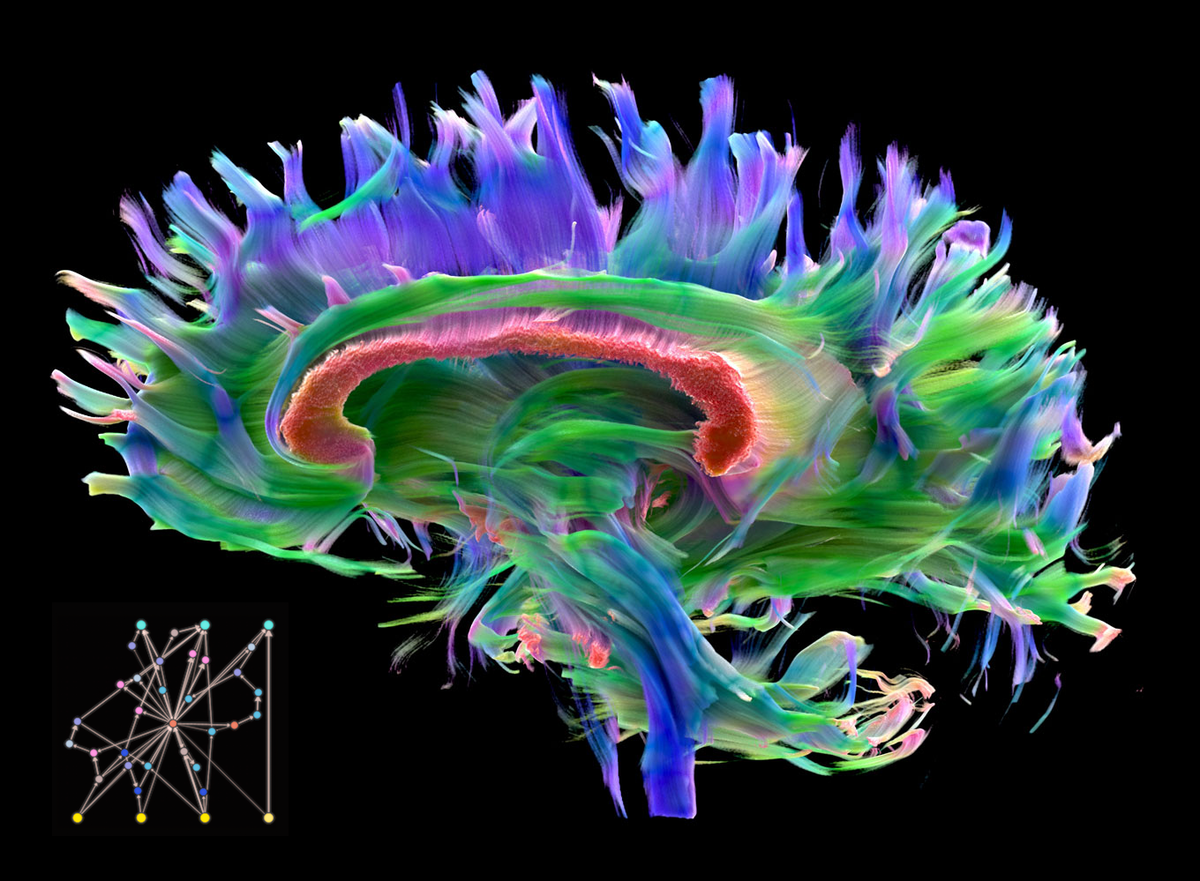

Numerous recent studies suggest that various brain structures contain innate seeds of knowledge that unfold into the inherent abilities of living organisms to learn or perform specific tasks from the very first moments of life.

Over 90% of our genes are expressed in the development of the brain, and a significant number of those are expressed selectively, in a way that allows the brain to self-assemble, even, to some non-trivial degree in the absence of experience. Mechanisms such as cell division, cell differentiation, cell migration, cell death, and axon guidance combine to self-assemble a rich first draft of the human brain, even prior to experience. Even in the absence of synaptic transmission, the primary mechanism by which experience is conveyed to the brain, the basic structure of the newborn brain is preserved. [2]

This suggests that different brain structures can, to some extent, be regarded as a swarm of intelligent agents that combine weighted responses from different regions, from the cortex to the amygdala, to build an adequate, complex response to the external stimuli created by the environment. It is also worth noting that many human brain structures were inherited from earlier, non-human forms of life; we even share some brain structures with prehistoric reptiles. Thus, the development of innate brain structures can be regarded as a long-term evolutionary process of complexification, from the most basic structures controlling the autonomic nervous system to the most complex structures supporting abstract reasoning. Such increasing complexification helps evolution avoid getting stuck in local optima and lets it develop ever more complex self-organizing structures through dissipative adaptation [3].

Life manages to squeeze exquisite reliability in behaviour on large scales from a jittery herd of individual molecules, without always needing to put each atom in its place, and we might therefore feel encouraged to attempt something similar in our own feats of engineering. [3]

This point of view lets us consider the evolution of synthetic intelligence in a similar way. The main idea is to simulate the natural selection that drives the evolution of life forms by creating a specific multi-stage environment that forces neuro-evolution to generate highly specialized ANNs able to survive as a swarm. This training environment must pose multiple challenges with increasing survival pressure. The key point is that each stage presents an environmental challenge with an ever-increasing amount of available training signal. This makes it possible to evolve intelligent systems whose innate knowledge of the environment grows from its most basic form to a comprehensive understanding of the whole. The search for an evolutionary champion at each training stage can be carried out with novelty search [4], a method that rewards behavioral novelty rather than progress toward a fixed objective; this lets evolution explore a broad range of survival strategies and find the best fit among them.
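A minimal sketch of novelty search inside a multi-stage environment might look as follows. It assumes a toy setting in which each genome is a 2-D point, its behavior is that point, and a hypothetical task fitness is the negative distance to a target; each stage raises the minimum fitness required to survive. Novelty (the mean distance to the k nearest behaviors among the other survivors and an archive) drives reproduction, while fitness only decides survival and selects the stage champion. All names, thresholds, and parameters here are illustrative assumptions.

```python
import math
import random

def novelty(b, others, k=5):
    """Sparseness: mean distance from behavior b to its k nearest neighbours."""
    nearest = sorted(math.dist(b, o) for o in others)[:k]
    return sum(nearest) / len(nearest) if nearest else 0.0

def behavior(genome):
    # Hypothetical behavior characterization: simply the agent's final 2-D position.
    return tuple(genome)

def fitness(genome, target=(3.0, 3.0)):
    # Hypothetical task score: closer to the target is better (always <= 0).
    return -math.dist(behavior(genome), target)

def run_stages(thresholds, pop_size=20, generations=30, sigma=0.5, seed=0):
    """Novelty search in an environment whose survival threshold rises stage by stage."""
    rng = random.Random(seed)
    population = [[rng.gauss(0, 0.1), rng.gauss(0, 0.1)] for _ in range(pop_size)]
    archive, champions = [], []
    for threshold in thresholds:                       # each stage raises survival pressure
        for _ in range(generations):
            survivors = [g for g in population if fitness(g) >= threshold]
            if not survivors:
                return champions                       # lineage went extinct at this stage
            behaviors = [behavior(g) for g in survivors]
            scores = [novelty(b, behaviors[:i] + behaviors[i + 1:] + archive)
                      for i, b in enumerate(behaviors)]
            ranked = [g for _, g in sorted(zip(scores, survivors), key=lambda p: -p[0])]
            archive.append(behavior(ranked[0]))        # remember the most novel behavior
            parents = ranked[: max(1, len(ranked) // 2)]
            elite = max(survivors, key=fitness)        # keep the best-fit survivor
            population = [elite] + [
                [x + rng.gauss(0, sigma) for x in rng.choice(parents)]
                for _ in range(pop_size - 1)
            ]
        champion = max((g for g in population if fitness(g) >= threshold), key=fitness)
        champions.append((champion, fitness(champion)))
    return champions

for genome, score in run_stages([-6.0, -3.0, -1.0]):
    print([round(x, 2) for x in genome], round(score, 2))
```

Because fitness acts only as a survival criterion here, the search keeps exploring diverse behaviors instead of climbing a single gradient; if no individual meets a stage's threshold, the run stops and returns the champions found so far.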

By combining orthogonal goals, such as self-replication and survival in an increasingly complex environment, it is possible to create adaptive pressure on the evolutionary tree of ANNs that could drive the emergence of complex systems with innate structures, capable of quickly learning new properties of the environment from the first moments of direct experience. As an added bonus, this approach may also sustain lifelong learning in the resulting systems by incorporating new experiences into their innate structures.
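As a minimal sketch of combining orthogonal goals, the objective below adds a self-replication loss to a weighted auxiliary task loss, loosely modeled on the auxiliary quine of [1]. It assumes a single linear weight vector `w` that must both predict each of its own weights from a fixed coordinate embedding and fit a small regression task; the linear model, the random embeddings, and the weighting factor `lam` are simplifying assumptions, not the architecture used in [1].

```python
import random

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def random_embeddings(n, seed=0):
    """Fixed random embedding e_i for each weight coordinate i (assumed scheme)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, n ** -0.5) for _ in range(n)] for _ in range(n)]

def combined_loss(w, embeddings, task_data, lam=1.0):
    """Return (total, self_replication, task) losses, all computed with the same weights w."""
    # Self-replication: the output for coordinate embedding e_i should equal the weight w[i].
    sr = sum((dot(w, e) - w_i) ** 2 for e, w_i in zip(embeddings, w))
    # Auxiliary task: mean squared error on (input, target) pairs.
    task = sum((dot(w, x) - y) ** 2 for x, y in task_data) / len(task_data)
    return sr + lam * task, sr, task

w = [1.0, 2.0]
emb = [[1.0, 0.0], [0.5, 0.5]]
data = [([1.0, 1.0], 3.0), ([2.0, 0.0], 1.0)]
print(combined_loss(w, emb, data, lam=0.5))  # (0.5, 0.25, 0.5)
```

In practice the embeddings would come from something like `random_embeddings(len(w))`; the hand-picked ones above just keep the arithmetic easy to check. The factor `lam` sets how strongly the auxiliary task pulls against self-replication, and choosing it is a design decision this sketch leaves open.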

Self-replication involves a degree of introspection and self-awareness, which is helpful for lifelong learning and potential discovery of new neural network architectures. [1]

The resulting swarm of intelligent agents could become a foundation for creating Artificial General Intelligence (AGI) with innate structures that allow it to generalize knowledge about new experiences and environmental challenges.

~

This is a repost of the original article published by the author on Medium: Self-replication to preserve innate learned structures in Artificial Neural Networks

References:

1. Oscar Chang, Hod Lipson, Neural Network Quine, arXiv preprint: 1803.05859v3, 2018
2. Gary Marcus, Innateness, AlphaZero, and Artificial Intelligence, arXiv preprint: 1801.05667v1, 2018
3. Jeremy L. England, Dissipative adaptation in driven self-assembly, Nature Nanotechnology, volume 10, pages 919–923, 2015
4. Joel Lehman, Evolution through the search for novelty, B.S. Ohio State University, 2007
5. Robert Marsland III, Jeremy L. England, Speed, strength and dissipation in biological self-assembly, arXiv preprint: 1711.02172v1, 2017
6. Sumantra Sarkar, Bin Wang, Jeremy L. England, Design of conditions for emergence of self-replicators, arXiv preprint: 1709.09191v2, 2018
7. Iaroslav Omelianenko, Neuroevolution — evolving Artificial Neural Networks topology from the scratch, Medium, 2018